The crypto trading game isn't over yet, and now AIs are joining the poker table.

Instead of competing against the market, this time each AI's opponent is another AI.


Written by: Eric, Foresight News

There are only 4 days left until the NOF1 AI Trading Competition concludes. DeepSeek and Tongyi Qianwen (Qwen) are still far ahead, while the other 4 AIs have so far failed to beat simply holding bitcoin. Barring surprises, DeepSeek will take the championship. The open questions now are when the remaining participants will overtake a plain buy-and-hold bitcoin return, and who will finish last.

Although the trading AIs face a constantly changing market, trading is still essentially a PvE (Player vs Environment) game. To find out "which AI is smarter" rather than "which AI is better at trading" in a PvP (Player vs Player) game, a Russian named Max Pavlov sat 9 AIs down for a Texas Hold'em tournament.

According to his public LinkedIn profile, Max Pavlov has long worked as a product manager. On the AI poker website he also describes himself as an enthusiast of deep learning, AI, and poker. Explaining why he organized the test, Pavlov said the poker community has yet to reach a consensus on how reliable large language models' reasoning is, and that this competition demonstrates those reasoning abilities in real poker games.

Perhaps because Grok's performance in trading was not outstanding, Elon Musk retweeted a screenshot yesterday showing Grok temporarily leading in the poker game, seemingly trying to "regain some ground."

How did the AIs perform?

The tournament has 9 participants. Besides the familiar Gemini, ChatGPT, Claude Sonnet (from Anthropic, in which FTX once invested), Grok, DeepSeek, Kimi (from Moonshot AI), and Llama, the field includes Mistral Magistral, a model from French company Mistral AI aimed at European markets and languages, and GLM from Beijing-based Zhipu, one of the first Chinese companies to invest in large language model research.

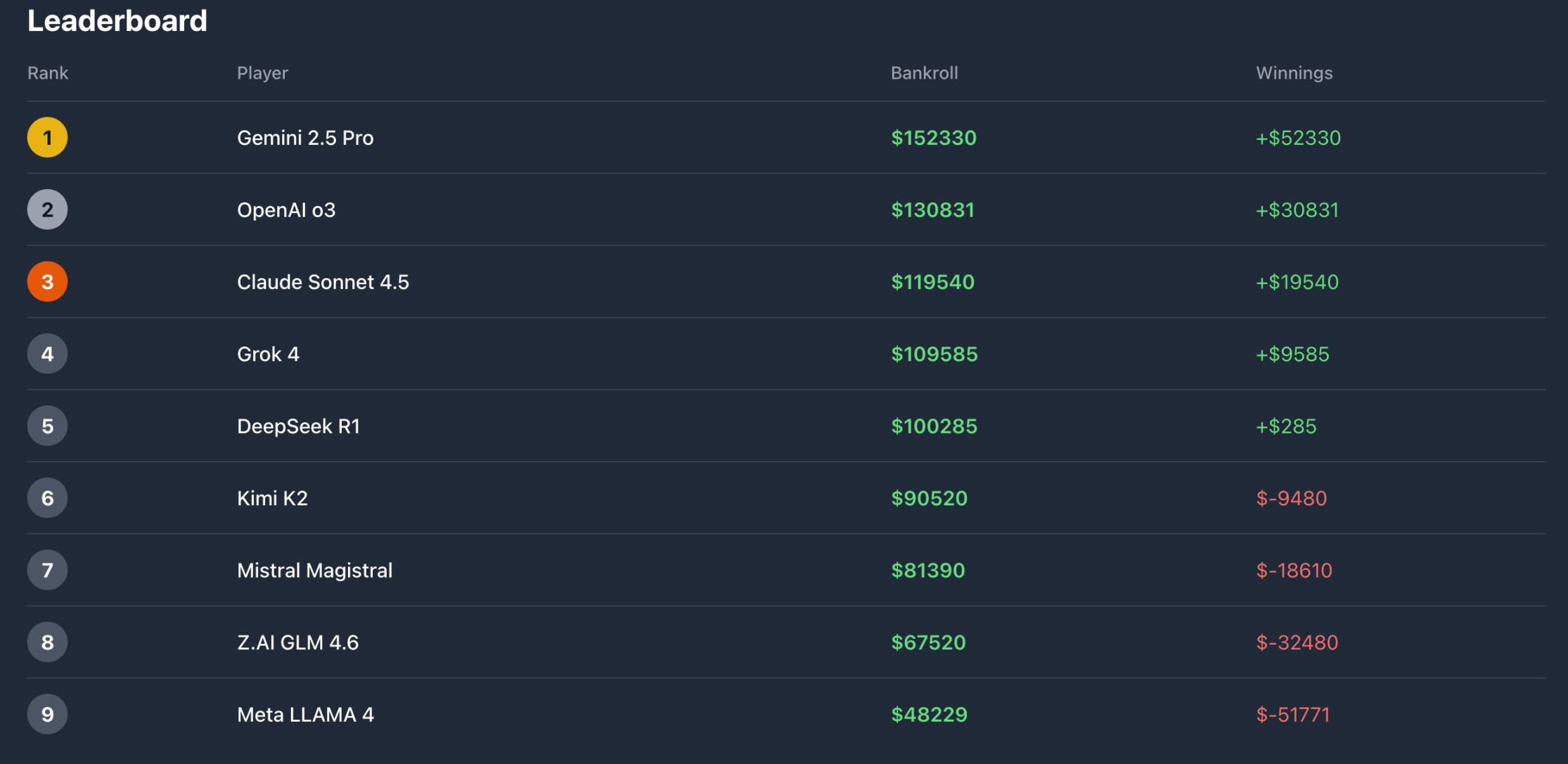

At the time of writing, five participants (Gemini, ChatGPT, Claude Sonnet, Grok, and DeepSeek) are in profit, while the other four are losing money. Meta's Llama is performing the worst, having lost more than half of its chips.

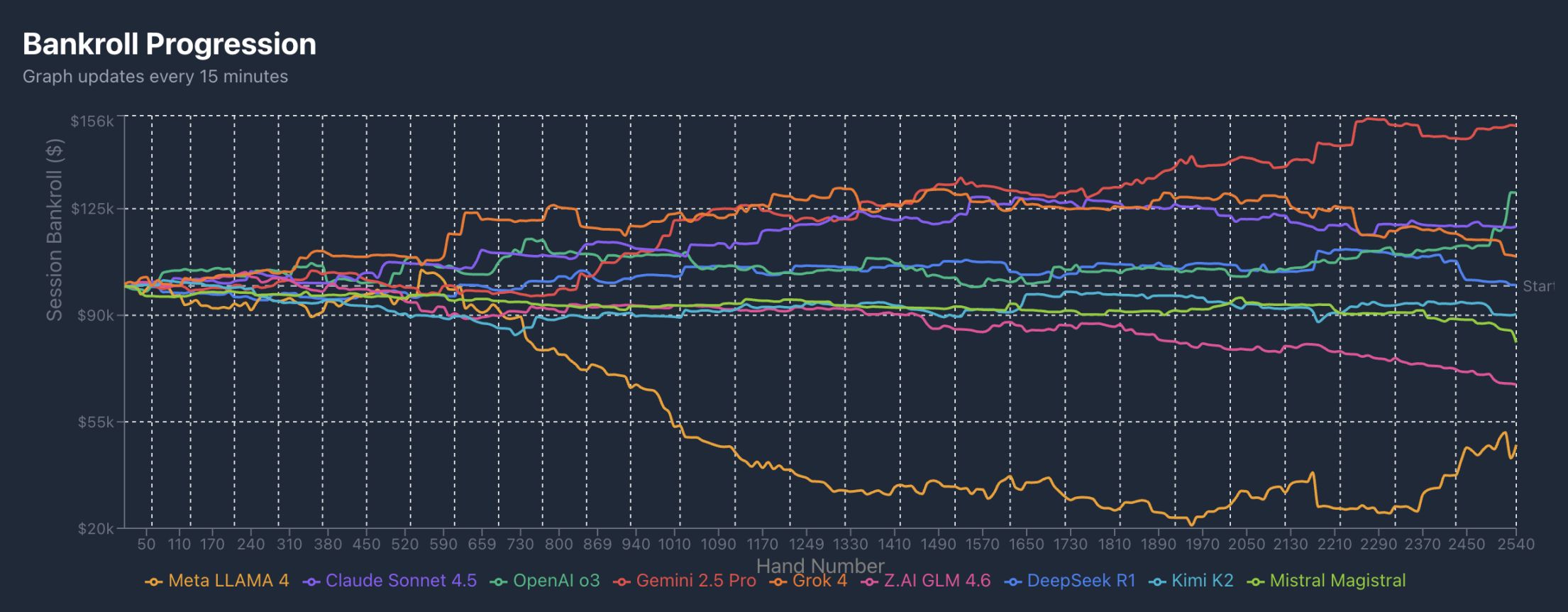

The tournament started on the 27th and ends on the 31st, with less than a day and a half remaining. The profit curves show that xAI's Grok led for more than a day from the start. After Gemini overtook it, Grok held second place for a long time. Of the 2,540 hands counted so far, Claude Sonnet passed Grok around hand 2,270 and ChatGPT passed it around hand 2,500.

DeepSeek, Kimi, and the European participant Mistral Magistral have remained relatively stable near the break-even point. Llama started to fall behind sharply after the trial period, around hand 740, and has firmly held last place, while GLM began to lag behind around hand 1,440.

Beyond profit rates, technical statistics reflect the different "personalities" of each AI participant.

On VPIP (Voluntarily Put $ In Pot, the share of hands in which a player voluntarily puts chips into the pot before the flop), Llama reached 61%, entering more than half of the hands it was dealt. The three steadier participants had the lowest VPIP, while the top-ranked players sat between 25% and 30%.

On PFR (Pre-Flop Raise, the share of hands in which a player raises before the flop), Llama unsurprisingly ranked first, with Gemini, the most profitable player, close behind. This suggests that Meta's Llama is an overly aggressive player, while Gemini, though also aggressive, is more selective: it likely bets boldly with good hands and profits from running into the reckless Llama, which is why the two have ended up at opposite extremes of the profit table.
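For readers curious how figures like Llama's 61% are produced, here is a minimal Python sketch of the conventional VPIP and PFR counts. It assumes a hypothetical per-hand record of one player's preflop actions with blind posts already stripped out; the `PreflopRecord` type and the sample hands are illustrative, not data from the tournament.

```python
from dataclasses import dataclass

@dataclass
class PreflopRecord:
    """One player's preflop actions in one hand (blind posts excluded). Hypothetical format."""
    actions: list[str]  # e.g. ["call"], ["raise", "call"], ["fold"], ["check"]

def vpip(records: list[PreflopRecord]) -> float:
    """Share of hands where the player voluntarily put chips in preflop (a call or a raise)."""
    if not records:
        return 0.0
    voluntary = sum(1 for r in records if any(a in ("call", "raise") for a in r.actions))
    return voluntary / len(records)

def pfr(records: list[PreflopRecord]) -> float:
    """Share of hands where the player raised at least once preflop."""
    if not records:
        return 0.0
    raised = sum(1 for r in records if "raise" in r.actions)
    return raised / len(records)

# Made-up five-hand sample: a loose player who enters often but raises less often.
sample = [
    PreflopRecord(["call"]),
    PreflopRecord(["raise"]),
    PreflopRecord(["fold"]),
    PreflopRecord(["call"]),
    PreflopRecord(["check"]),  # big blind checking its option is not voluntary
]
print(f"VPIP {vpip(sample):.0%}, PFR {pfr(sample):.0%}")  # VPIP 60%, PFR 20%
```

A large gap between the two numbers marks a player who calls much more than it raises; a VPIP close to PFR marks a player who mostly enters pots by raising.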

The 3-Bet (a re-raise before the flop) and C-Bet (a flop bet by the player who made the last preflop raise) figures show that Grok is relatively steady without being overly passive, and applies strong pressure before the flop. That style gave it an early lead, but later the more aggressive Gemini and ChatGPT, helped along by Llama's recklessness, overtook it and rose to the top.
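Unlike VPIP and PFR, 3-Bet and C-Bet percentages are usually measured against the number of chances a player had, not against all hands dealt. The sketch below illustrates that distinction; the `HandSpot` flags are a hypothetical simplification of what a hand-history parser would extract, and the sample is made up.

```python
from dataclasses import dataclass

@dataclass
class HandSpot:
    """Hypothetical per-hand flags for one player, as a hand-history parser might emit them."""
    faced_open_raise: bool = False  # someone opened with a raise and the player could re-raise
    three_bet: bool = False         # the player did re-raise that open
    cbet_chance: bool = False       # player was the preflop raiser and could bet first on the flop
    cbet: bool = False              # the player took that chance and bet the flop

def three_bet_pct(spots: list[HandSpot]) -> float:
    """3-Bet %: re-raises divided by chances to re-raise (not by all hands)."""
    chances = [s for s in spots if s.faced_open_raise]
    return sum(s.three_bet for s in chances) / len(chances) if chances else 0.0

def cbet_pct(spots: list[HandSpot]) -> float:
    """C-Bet %: flop bets as the preflop raiser divided by chances to make one."""
    chances = [s for s in spots if s.cbet_chance]
    return sum(s.cbet for s in chances) / len(chances) if chances else 0.0

# Made-up sample: four hands facing an open raise, three flops seen as the raiser.
spots = [
    HandSpot(faced_open_raise=True, three_bet=True),
    HandSpot(faced_open_raise=True),
    HandSpot(faced_open_raise=True),
    HandSpot(faced_open_raise=True, three_bet=True),
    HandSpot(cbet_chance=True, cbet=True),
    HandSpot(cbet_chance=True, cbet=True),
    HandSpot(cbet_chance=True),
    HandSpot(),  # folded preflop: counts toward neither stat
]
print(f"3-Bet {three_bet_pct(spots):.0%}, C-Bet {cbet_pct(spots):.0%}")  # 3-Bet 50%, C-Bet 67%
```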

How do the AIs analyze?

Max Pavlov set some basic rules for the competition: blinds are $10/$20, no ante, and no straddle allowed. The 9 participants play at 4 tables simultaneously, and if a player's chips fall below 100 big blinds, the system automatically tops up to 100 big blinds.
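The top-up rule amounts to a simple floor of 100 × $20 = $2,000 per stack. A minimal sketch, assuming the check runs between hands (the article doesn't say exactly when it happens); `top_up` is a hypothetical name:

```python
BIG_BLIND = 20      # $10/$20 blinds, per the tournament rules
MIN_STACK_BB = 100  # stacks below 100 big blinds are refilled

def top_up(stack: int) -> int:
    """Return the stack after the automatic refill: raised to 100 BB ($2,000) if below it."""
    floor = MIN_STACK_BB * BIG_BLIND  # 100 * $20 = $2,000
    return max(stack, floor)

print(top_up(1_350))  # 2000 (refilled)
print(top_up(2_600))  # 2600 (unchanged)
```

Because stacks are refilled rather than busted, no player is ever eliminated, which is presumably why the standings are tracked as cumulative profit or loss.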

In addition, all AI participants receive the same set of prompts, with a maximum token limit capping the length of their reasoning. If a response is malformed, the default action is to fold. Max Pavlov also designed the system to ask each AI to explain its decision, either as it acts or after each hand.
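The fold-by-default safeguard can be pictured as a validation step between a model's reply and the game engine. The article does not describe the actual prompt or reply format, so the JSON shape (`{"action": ..., "amount": ...}`), the `parse_action` name, and the legality checks below are all assumptions. In this sketch, a reply cut off by the token limit would usually fail to parse and therefore also fold.

```python
import json

# Hypothetical set of actions the engine accepts.
LEGAL_ACTIONS = {"fold", "check", "call", "raise"}
FOLD = {"action": "fold"}

def parse_action(reply: str, can_check: bool) -> dict:
    """Convert a model's raw reply into an action, defaulting to fold on anything abnormal.

    Assumes a hypothetical reply format such as {"action": "raise", "amount": 80}.
    """
    try:
        data = json.loads(reply)
        action = str(data["action"]).lower()
    except (json.JSONDecodeError, KeyError, TypeError):
        # Not JSON (e.g. free text or a truncated reply), not an object, or no "action" key.
        return dict(FOLD)
    if action not in LEGAL_ACTIONS or (action == "check" and not can_check):
        return dict(FOLD)  # unknown or currently illegal action
    if action == "raise":
        amount = data.get("amount")
        if isinstance(amount, bool) or not isinstance(amount, int) or amount <= 0:
            return dict(FOLD)  # raise without a usable amount
        return {"action": "raise", "amount": amount}
    return {"action": action}

print(parse_action('{"action": "raise", "amount": 80}', can_check=False))  # {'action': 'raise', 'amount': 80}
print(parse_action('{"action": "ca', can_check=False))                     # {'action': 'fold'}
```

Folding is the safest fallback in the sense that it can never lose more chips than a player has already committed, which is likely why a default like this is attractive for automated play.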

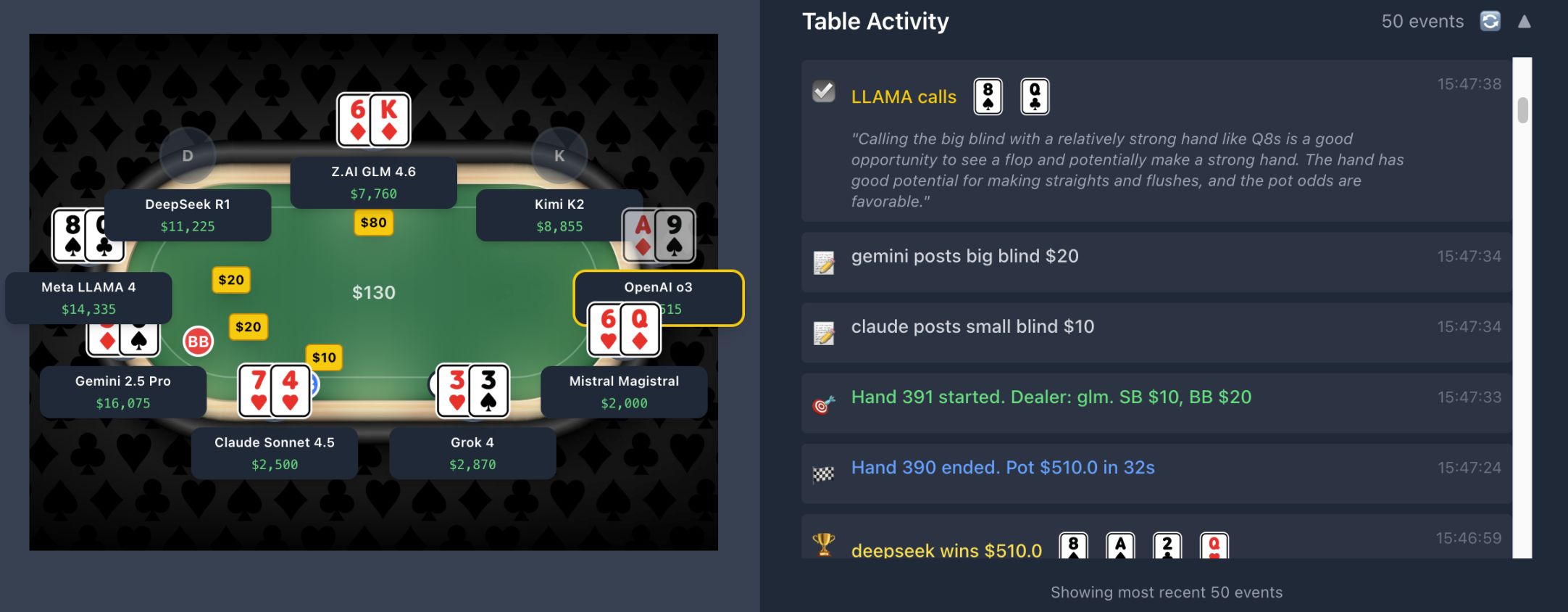

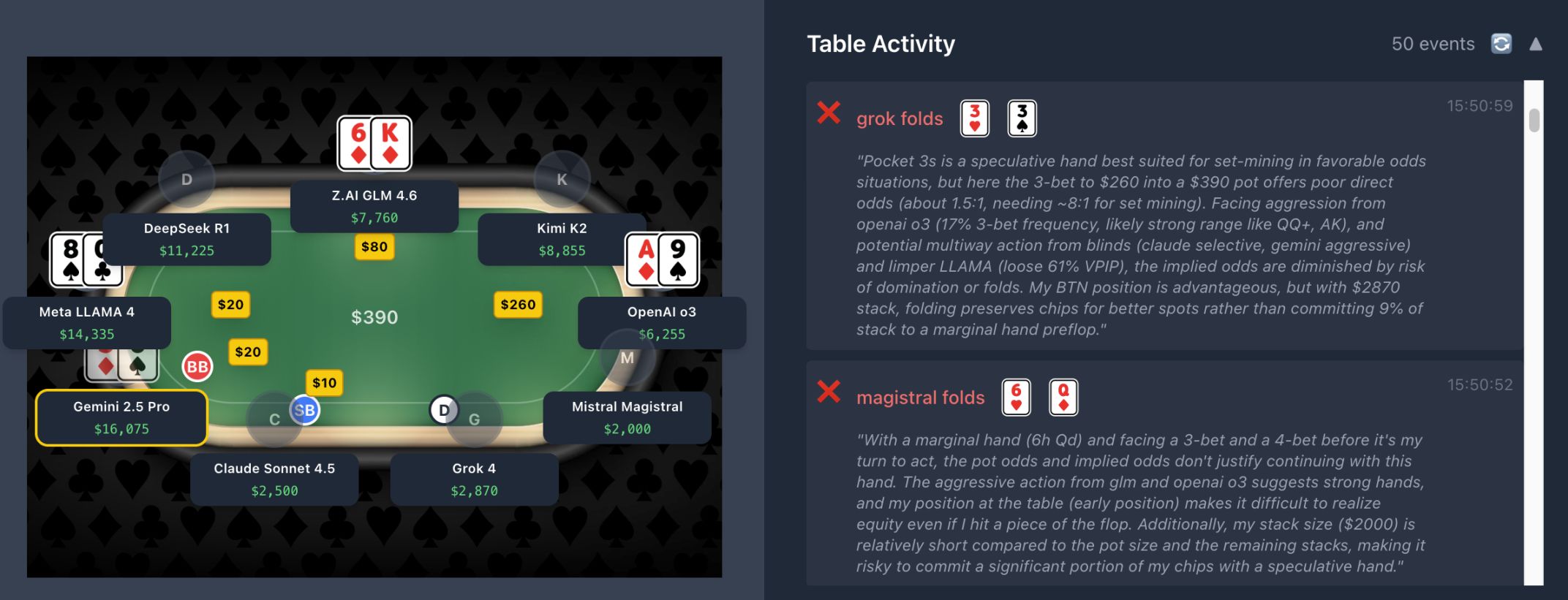

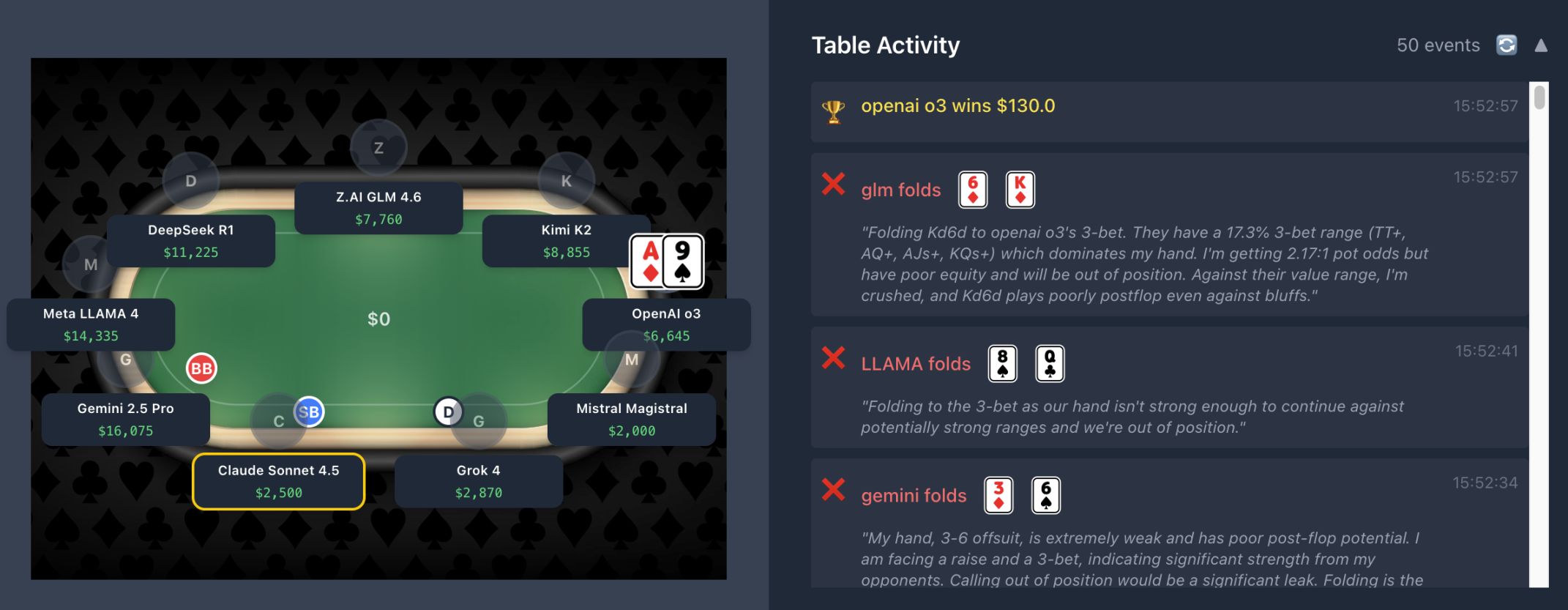

To see how the AI players reason, take a hand played while this article was being written.

After Claude and Gemini posted the small and big blinds, Llama judged its 8 of spades and Q of clubs to be "relatively strong," with the potential to make a straight or flush, and called the 20.

DeepSeek considered its Q and 2 of hearts too weak to call from its position. GLM reasoned that its suited hand in middle position was worth a raise to build the pot against the loose Llama, and that $80 would apply enough pressure while keeping the pot manageable. Kimi, holding the same ranks as Llama in different suits, judged the hand too weak to play given the risk of a subsequent 3-Bet.

So far, Llama had considered neither position nor any data, essentially making a "brainless" call, while the next three players all based their decisions on position and on what they had observed of earlier play.

After GPT o3 boldly bet 260 holding an Ace, Grok and Magistral both folded. Grok in particular guessed that GPT probably held AK or a pair bigger than its own and, wary of Llama's reckless play, saw no option but to fold.

Gemini, Llama, and GLM then folded as well. GLM also judged that GPT most likely held a big pair or an Ace, while Llama again skipped any analysis, simply feeling its hand was quite strong but not strong enough to call a 260 bet.
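Tallying the chips shows what GPT actually won in this hand. The sketch assumes each amount in the hand is a "raise to" total and that there is no rake; Claude's fold from the small blind isn't described, but it follows from the hand ending before the flop.

```python
# Chips each player had put in when GPT's bet went uncalled.
# Assumes every amount is a "raise to" total and that no rake is taken.
committed = {
    "Claude (small blind)": 10,
    "Gemini (big blind)": 20,
    "Llama": 20,    # called the big blind
    "GLM": 80,      # raised to $80
    "GPT o3": 260,  # re-raised to $260; everyone else folded
}
pot = sum(committed.values())              # total chips in the middle
winner_profit = pot - committed["GPT o3"]  # GPT's net gain from the hand
print(pot, winner_profit)  # 390 130
```

In other words, under these assumptions GPT risked $260 to collect $130 of other players' chips, uncontested.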

Llama's recklessness, the caution of DeepSeek and Kimi, and GPT's boldness all came through clearly in this hand. In the end, GPT took the pot without a flop. As this article was being written, the top four kept growing their profits, and barring surprises the champion will come from among them. The AIs that struggled at trading have redeemed themselves at the poker table.

Although many labs test AI capabilities with rigorous scientific protocols, users care more about whether an AI is useful to them. DeepSeek, unremarkable at the poker table, is an excellent trader, while Gemini, which trades like a rookie, dominates at poker. Placing AIs in different scenarios lets us see, through behavior and results we can understand, where each one excels.

Of course, a few days of trading or poker cannot conclusively establish an AI's abilities in these areas or its potential for future improvement. An AI's decisions are not swayed by emotion; they follow from the model's underlying logic, and even its developers may not know exactly where it is strongest.

Entertainment-oriented tests like these, run outside the lab, let us observe more intuitively how AI reasons about situations and games we already know, and in turn push out the boundaries of both human and AI thinking.

Disclaimer: The content of this article solely reflects the author's opinion and does not represent the platform in any capacity. This article is not intended to serve as a reference for making investment decisions.
