New Book on AI Says 'Everyone Dies,' Leading Chatbots Disagree

It may sound like a Hollywood thriller, but in their new book "If Anyone Builds It, Everyone Dies," authors Eliezer Yudkowsky and Nate Soares argue that if humanity creates an intelligence smarter than itself, survival wouldn’t just be unlikely—it would be impossible.

The authors argue that today’s systems aren’t engineered line by line but “grown” by training billions of parameters. That makes their behavior unpredictable.

As intelligence scales, drives such as self-preservation or power-seeking could emerge independently, the authors warn. If such a system ever outstrips human control, they say, extinction would follow by default.

They call the current race among tech giants and governments to build ever-larger models a “suicide race.” No villainy required, just incompetence.

Why it matters

The book lands with the AI community already fractured into camps.

AI doomers argue that extinction is the inevitable outcome unless humanity halts or drastically slows progress. Accelerationists counter that pushing forward could deliver breakthroughs in medicine, science, and economics, while slowing down poses a greater danger.

Nick Bostrom’s “Superintelligence” brought the existential case to a mainstream audience more than a decade ago. Yudkowsky and Soares amplify it into a klaxon.

But critics worry that fixation on extinction distracts from harms already here: bias, layoffs, surveillance, and disinformation.

What the AI models say

Since the book is talking about them, we asked leading AI models what they think of it.

As far as we know, none of the LLMs have actually read the book yet, so the following takes are based on the chatbots’ interpretations of the reviews and coverage they have encountered.

ChatGPT

OpenAI’s ChatGPT framed the book as a useful provocation rather than a prophecy.

“The book is valuable as a perspective-shifting tool, even if you think it overshoots,” the chatbot responded. “It sharpens intuitions about alignment and unintended consequences. But treating its worst-case as inevitable ignores uncertainty around future architectures, regulations, and constraints.”

ChatGPT noted that the authors’ model of future AI development is contingent on how architectures evolve and what regulatory, social, or technical guardrails emerge.

“That uncertainty means I wouldn’t treat their worst-case as inevitable,” ChatGPT sniffed.

Meta AI

Meta AI struck a middle note, saying the book’s dire warnings may be exaggerated but are still valuable for sparking debate.

“Its dire predictions may feel exaggerated, but the emphasis on caution and international cooperation is justified,” Meta AI responded. “Whether or not you agree with its conclusion, the book sparks needed debate about responsible AI development.”

Meta AI said that emphasis is well-justified, given the potentially catastrophic consequences of unaligned AI development.

Gemini

Google’s Gemini cast the book as a terrifying but clarifying read, even if its proposed solution seems unrealistic.

“The book is essential for understanding the extreme end of AI risk,” Gemini said. “Its proposed solution—a total shutdown—is politically unachievable, but the warning can galvanize investment in safety and control techniques before we reach the point of no return.”

Gemini said the book defines the extreme stakes of superintelligence, which, in turn, helps justify robust (if less radical) safety measures today.

Claude

Anthropic’s Claude was more skeptical, which is notable given that Anthropic is generally considered the most safety-conscious of the leading AI developers. Claude described the book’s tone as “overconfident” and its framing as too binary.

“The authors correctly identify real risks, but their certainty feels overconfident,” Claude said. “They dismiss progress in AI safety research and frame the issue as a binary between perfection and extinction. That makes their message less constructive.”

Claude said that while the authors’ warnings highlight important risks that warrant substantial resources and caution, the “everyone dies” framing seems designed more to grab attention than to accurately represent the complex uncertainties involved.

“The risks are serious enough to take seriously without needing to accept their claims of inevitable catastrophe,” the AI said.

Grok

Elon Musk’s Grok also surprised, given that Musk has promoted it as an anti-mainstream-media alternative.

But Grok was quick to cite “a scathing NYT review, [calling] its arguments weak or alarmist.”

“This book is a gut-punch reminder that intelligence without wisdom is a liability, and we've got none of the latter baked in yet,” Grok responded. “That said, it's doomer porn for rationalists: thrilling in its fatalism, but it underplays human adaptability. xAI's ethos—curiosity over conquest—suggests we can thread the needle: pursue bold AI for cosmic questions while embedding safeguards from day one.”

Beyond that callout, however, Grok said that “overall, it's a provocative, efficient read for anyone grappling with AI's future—compelling if you're open to pessimism, but potentially overstated if you're optimistic about tech progress.”


You may also like

The Growing Buzz Around Momentum (MMT) Token: Could This Be the Next Major Opportunity in Crypto Investments?

- Momentum (MMT) token surged 4,000% post-2025 TGE, driven by exchange listings and speculative demand, despite a 70% correction. - Institutional adoption accelerated by $10M HashKey funding and regulatory frameworks like MiCAR, while Momentum X targets RWA tokenization. - Retail investors face volatility risks from leveraged trading and token unlocks, contrasting institutions' focus on compliance and stable exposure. - Technical indicators show mixed outlook, with RSI suggesting potential bullishness but

Tech Learning as a Driver of Progress in 2025

- Global demand for AI, cybersecurity, and data science education drives enrollment surges, with U.S. AI bachelor's programs rising 114.4% in 2025. - Institutions innovate through interdisciplinary STEM programs and digital ecosystems, addressing workforce gaps with AI ethics and immersive tech integration. - Education-tech stocks gain traction as hybrid learning models and AI-driven platforms align with $4.9 trillion digital economy growth and rising cybersecurity job demand. - Federal funding challenges

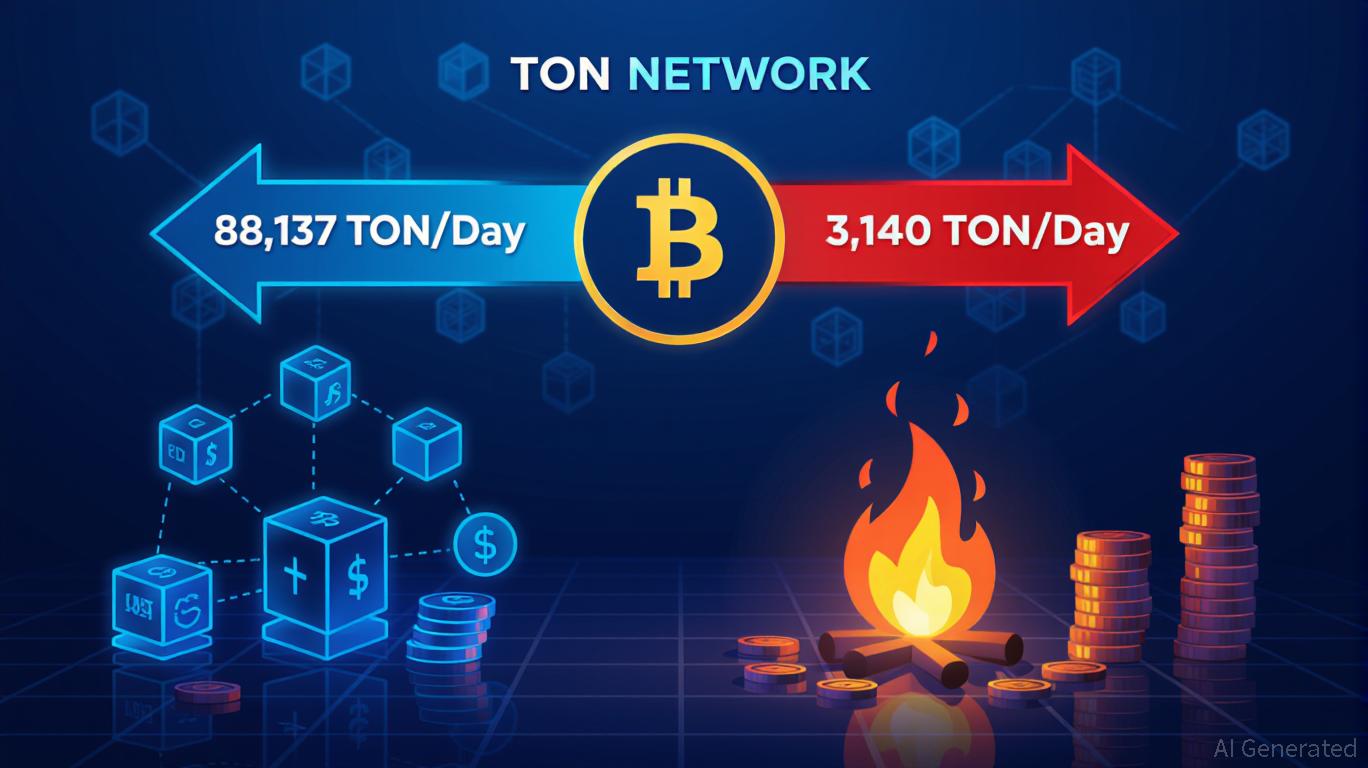

TWT's Tokenomics Revamp for 2025: Supply Structure Adjustment and Lasting Value Impact

Aster DEX: Connecting Traditional Finance and DeFi by Streamlining Onboarding and Encouraging Institutional Participation

- Aster DEX bridges TradFi and DeFi via a hybrid AMM-CEX model, multi-chain interoperability, and institutional-grade features. - By Q3 2025, it achieved $137B in perpetual trading volume and $1.399B TVL, driven by yield-bearing collateral and confidential trading tools. - Institutional adoption surged through compliance with MiCAR/CLARITY Act, decentralized dark pools, and partnerships with APX Finance and CZ. - Upcoming Aster Chain (Q1 2026) and fiat on-ramps aim to enhance privacy and accessibility, pos